Object Storage Service Redundancy (CVOS)

Note: Object Storage Service Redundancy is only available on Windows and Linux.

The Object Storage Service is used for storing objects, such as profile and event images, as well as ephemeral data, such as event reply messages.

The service can operate in a redundant configuration when you have multiple SAFR servers running. The primary server load-balances the Object Storage Service requests it receives across all redundant secondary servers.

Local Object Storage vs Shared Object Storage

Local Object Storage

By default, all redundant servers save objects locally and ask other Object Storage Servers for objects they do not have locally.

When you’re using local object storage, you will lose access to all objects that are only stored by an offline Object Storage Server until the server becomes healthy again. If that server’s objects are lost, and you do not have backups, they will be unrecoverable.

Backups must be run on every redundant server that has Object Storage enabled.

Shared Object Storage

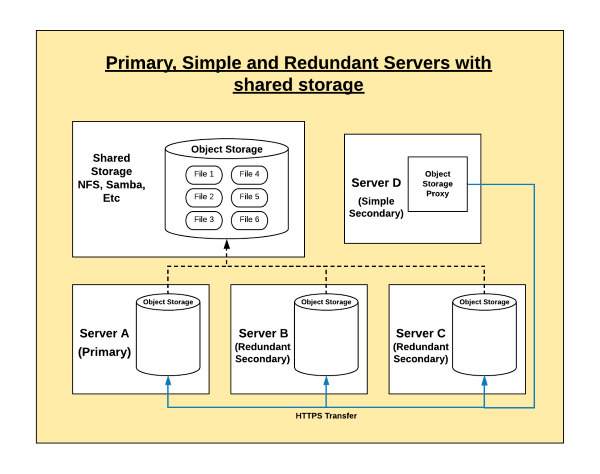

Using network storage (NFS, SMB, etc.) provides a shared location where every server saves and retrieves objects. This gives each Object Storage Server access to all of the objects, rather than just the objects saved to its local storage.

Shared storage also provides an easier backup process, as you only have to run it from the primary server.

Simple vs. Redundant Secondary Server Behavior

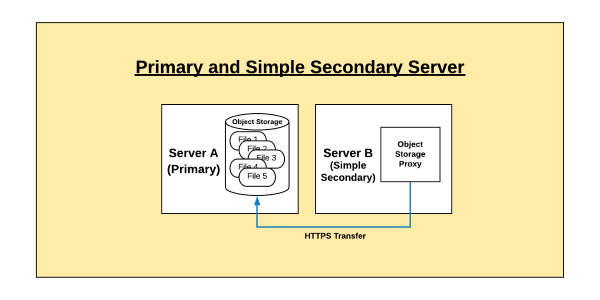

Simple Secondary Servers

On simple secondary servers, the Object Storage Service will operate in proxy mode.

Object Storage Servers operating in proxy mode will not attempt to use their own storage for objects, but will instead proxy the request to Object Storage Services that are running on either the primary server or on a redundant secondary server. If the redundant server it contacts doesn’t have the object, the contacted redundant server will ask all other redundant servers for the object.

The list of servers that run the Object Storage Service is stored in the database and updated every minute. If a host does not respond within a timeout, it is de-prioritized.
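The proxy behavior and host de-prioritization described above can be sketched roughly as follows. This is a minimal illustration, not SAFR's actual implementation: `HostList`, `proxy_get`, and the `fetch` callback (a stand-in for the HTTPS request) are hypothetical names, and the sketch does not model when a de-prioritized host regains its priority, since the text does not say.

```python
from dataclasses import dataclass, field


@dataclass
class HostList:
    """Hypothetical in-memory copy of the Object Storage host list kept in the database."""
    hosts: list[str]
    deprioritized: set[str] = field(default_factory=set)

    def ordered(self) -> list[str]:
        # Responsive hosts first, de-prioritized hosts last; order is otherwise unchanged.
        healthy = [h for h in self.hosts if h not in self.deprioritized]
        slow = [h for h in self.hosts if h in self.deprioritized]
        return healthy + slow

    def refresh(self, hosts_from_db):
        # Stand-in for the periodic (every minute) reload of the host list.
        self.hosts = list(hosts_from_db)
        self.deprioritized &= set(self.hosts)


def proxy_get(key, host_list, fetch):
    """Forward a request to the first host that answers within the timeout.

    fetch(host, key) is assumed to raise TimeoutError when the host is too slow.
    The contacted host is responsible for asking its own peers, so its answer
    (even None) is returned as-is.
    """
    for host in host_list.ordered():
        try:
            return fetch(host, key)
        except TimeoutError:
            host_list.deprioritized.add(host)
    return None
```

In this sketch a host that times out is simply tried last on later requests rather than being removed from the list.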

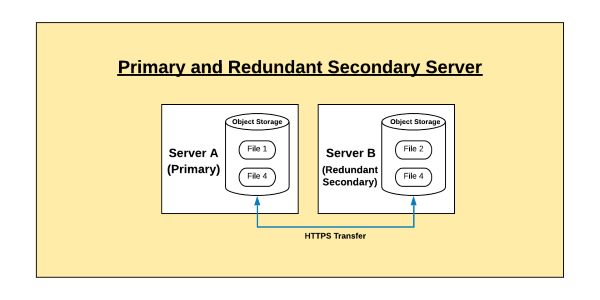

Redundant Secondary Servers (and the Primary Server)

On both the primary server and on redundant secondary servers the Object Storage Service stores new objects in storage.

When a server receives a request for a file it does not find in its storage, it will request the object from other Object Storage Servers via HTTPS, and return the object if found. (The same applies for DELETEs.) This allows multiple Object Storage Servers to operate without using shared network storage, with each server saving a subset of the total objects, and relaying requests for other objects to its neighbors.

Even when using shared network storage, sometimes a request will come in for a new object before it is visible to all systems on the shared storage. The Object Storage Service will ask all the other Object Storage Servers for the object until it finds one that has the object.
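The lookup behavior in this section (check your own storage first, then ask the other Object Storage Servers) can be modeled with a small toy example. Everything here is an illustrative assumption: the `ObjectStorageServer` class, the dictionary standing in for storage, and the `ask_peers` flag used to stop a relayed request from being relayed again are not taken from SAFR itself.

```python
class ObjectStorageServer:
    """Toy model of a primary or redundant secondary server; a dict stands in for storage."""

    def __init__(self, name):
        self.name = name
        self.local = {}
        self.peers = []

    def post(self, key, data):
        # New objects are stored by whichever server receives the POST.
        self.local[key] = data

    def get(self, key, ask_peers=True):
        if key in self.local:
            return self.local[key]
        if ask_peers:
            for peer in self.peers:
                # ask_peers=False: a relayed request is not relayed again (assumption, avoids loops).
                obj = peer.get(key, ask_peers=False)
                if obj is not None:
                    return obj
        return None

    def delete(self, key, ask_peers=True):
        # DELETEs follow the same path: own storage first, then the other servers.
        if key in self.local:
            del self.local[key]
            return True
        if ask_peers:
            return any(peer.delete(key, ask_peers=False) for peer in self.peers)
        return False


if __name__ == "__main__":
    a, b = ObjectStorageServer("A"), ObjectStorageServer("B")
    a.peers, b.peers = [b], [a]
    a.post("profile-1.jpg", b"jpeg-bytes")
    print(b.get("profile-1.jpg"))  # served by A on B's behalf
```

Each server holds only a subset of the objects, yet a request sent to any of them can still be answered as long as the server holding the object is reachable.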

CVOS Redundancy Configurations

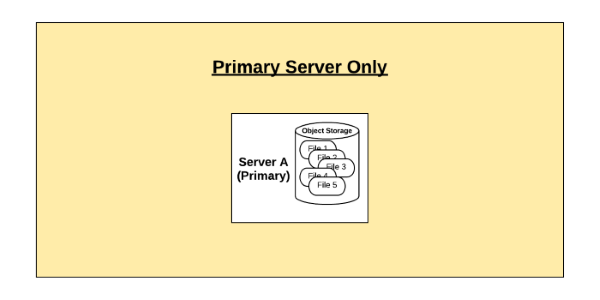

Single Server, Local Storage

All objects are stored on a single server, and no requests are proxied.

Primary and Simple Secondary Servers, Local Storage

All objects are stored on a primary server. Any object requests sent to the secondary server are proxied back to the primary server.

Primary and Redundant Secondary Servers, Local Storage

Objects are saved to whichever server receives the POST request. GET requests are served from the receiving server's local storage if the object is found there, or requested from other Object Storage Servers if not.

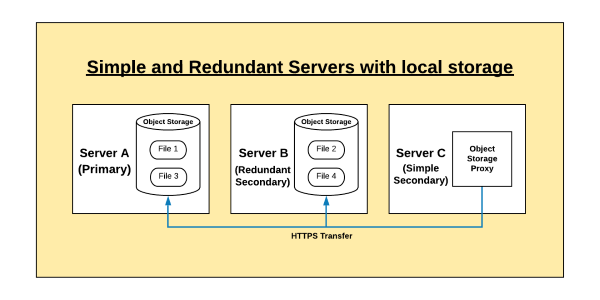

Primary, Redundant, and Simple Secondary Servers, Local Storage

Objects are saved locally on the host that services the POST request. GET requests are served from local storage if found, or requested from other Object Storage Servers if not. All requests to server C are proxied to servers A and B.

Primary, Redundant, and Simple Secondary Servers, Shared Storage

Objects are saved to shared storage by the host that services the POST request. GET requests are served from shared storage if the object is found there, or requested from other Object Storage Servers if not (for example, when a new object is not yet visible to every system on the shared storage). All requests to server C are proxied to servers A and B.

Migrating from Local to Shared Storage

If you start with local storage but later decide to move to shared storage, you will need to consolidate all of your objects to the new shared storage solution, delete the local copies, and then mount the shared storage to the right location. To do this, do the following:

- Back up both the primary and redundant secondary servers to ensure you have a full backup of all SAFR content.

  - On Linux:

    - Primary:

      python /opt/RealNetworks/SAFR/bin/backup.py

    - Redundant Secondaries:

      python /opt/RealNetworks/SAFR/bin/backup.py -o

  - On Windows:

    - Primary:

      python "C:\Program Files\RealNetworks\SAFR\bin\backup.py"

    - Redundant Secondaries:

      python "C:\Program Files\RealNetworks\SAFR\bin\backup.py" -o

- Stop all primary and redundant secondary servers by running the stop command on each server:

  - On Linux:

    /opt/RealNetworks/SAFR/bin/stop

  - On Windows:

    "C:\Program Files\RealNetworks\SAFR\bin\stop.bat"

- Mount the new shared storage to a temporary location on primary and redundant secondary servers.

- Copy all files from within the following path on the primary server and on every redundant secondary server to the temporary location of the shared storage:

  - On Linux:

    /opt/RealNetworks/SAFR/cv-storage

  - On Windows:

    C:\ProgramData\RealNetworks\SAFR\cv-storage

- Delete or move the contents of the CV Storage folder on the primary server and on each redundant secondary server. The folder is located at:

  - On Linux:

    /opt/RealNetworks/SAFR/cv-storage

  - On Windows:

    C:\ProgramData\RealNetworks\SAFR\cv-storage

- Unmount the temporary location of the new shared storage.

- Mount the shared storage to the correct CV Storage location, or create a symlink to the shared storage location.

- Start the primary and redundant secondary servers by running the start command on each server:

  - On Linux:

    /opt/RealNetworks/SAFR/bin/start

  - On Windows:

    "C:\Program Files\RealNetworks\SAFR\bin\start.bat"

- Disable any automatic backups configured on redundant secondary servers. Now that you’re using shared storage, only the primary server needs to be backed up.
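The consolidation step in the procedure above (copying every server's CV Storage contents into the temporarily mounted shared storage) could be scripted along these lines. This is a hedged sketch rather than a SAFR-provided tool: `consolidate` is a hypothetical helper, it assumes each server's cv-storage contents are reachable as an ordinary directory from where the script runs, and it assumes two files with the same relative path are the same object, so an existing file is skipped and reported instead of being overwritten.

```python
import shutil
from pathlib import Path


def consolidate(source_dirs, shared_dir):
    """Copy every file under each source directory into shared_dir, keeping relative paths.

    Returns the relative paths that already existed in shared_dir and were skipped,
    so they can be reviewed before any local copy is deleted.
    """
    shared = Path(shared_dir)
    skipped = []
    for src in map(Path, source_dirs):
        for path in sorted(src.rglob("*")):
            if not path.is_file():
                continue
            rel = path.relative_to(src)
            dest = shared / rel
            if dest.exists():
                skipped.append(rel)
                continue
            dest.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(path, dest)  # copy2 also preserves timestamps
    return skipped
```

Run it only while SAFR is stopped on all servers, and delete or move the local copies only after checking that the returned list contains nothing unexpected.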

Backup and Restore with Local Storage

The SAFR backup and restore process when using shared network storage is straightforward: you only need to back up the primary server. This backs up all configs, database content, and Object Storage objects.

When using local storage, the objects are distributed across multiple servers, so the backup must be run on the primary server as well as on every redundant secondary server.

The primary server should run a regular backup, while the redundant secondary servers run an ‘objects only’ backup. The difference is just the addition of the “-o” flag to the backup script.

When restoring multiple backups, you can restore them all to the primary server, or you can restore the ‘objects only’ backups to the same servers that they were backed up from.

Backup

- On Linux

  - Primary:

    python /opt/RealNetworks/SAFR/bin/backup.py

  - Redundant Secondaries:

    python /opt/RealNetworks/SAFR/bin/backup.py -o

- On Windows

  - Primary:

    python "C:\Program Files\RealNetworks\SAFR\bin\backup.py"

  - Redundant Secondaries:

    python "C:\Program Files\RealNetworks\SAFR\bin\backup.py" -o

Restore

- On Linux

  - Primary:

    python /opt/RealNetworks/SAFR/bin/restore.py BACKUPFILENAME

  - Redundant Secondaries:

    python /opt/RealNetworks/SAFR/bin/restore.py -o BACKUPFILENAME

- On Windows

  - Primary:

    python "C:\Program Files\RealNetworks\SAFR\bin\restore.py" BACKUPFILENAME

  - Redundant Secondaries:

    python "C:\Program Files\RealNetworks\SAFR\bin\restore.py" -o BACKUPFILENAME
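If you drive backups from your own automation, the rule above (regular backup on the primary, the same script with `-o` on redundant secondaries) can be captured in a small helper. This is only a sketch: `safr_command` and the role names `"primary"` and `"redundant-secondary"` are hypothetical, while the script paths and the `-o` flag are the ones listed in this section.

```python
import platform

# Script locations as listed in this section.
SCRIPT_DIRS = {
    "Linux": "/opt/RealNetworks/SAFR/bin",
    "Windows": r"C:\Program Files\RealNetworks\SAFR\bin",
}


def safr_command(action, role, system=None, backup_file=None):
    """Build the argument list for a backup or restore on the given server role."""
    if action not in ("backup", "restore"):
        raise ValueError(f"unknown action: {action}")
    if role not in ("primary", "redundant-secondary"):
        raise ValueError(f"unknown role: {role}")
    system = system or platform.system()
    separator = "\\" if system == "Windows" else "/"
    args = ["python", SCRIPT_DIRS[system] + separator + action + ".py"]
    if role == "redundant-secondary":
        args.append("-o")  # 'objects only' backup or restore
    if action == "restore":
        if not backup_file:
            raise ValueError("restore needs a backup file name")
        args.append(backup_file)
    return args
```

The returned list can be passed to `subprocess.run(args, check=True)`; building it as a list rather than a single string avoids quoting problems with the space in `Program Files`.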

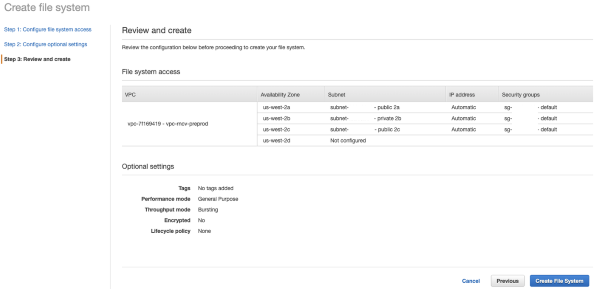

Example Shared Storage Configurations

Below are two example shared storage solutions.

Linux

Shared storage on Linux is straightforward: mount NFS or some other shared storage at the /opt/RealNetworks/SAFR/cv-storage location.

- Stop SAFR.

  /opt/RealNetworks/SAFR/bin/stop

- Create NFS or some other shared storage location. The example below uses AWS EFS.

- Edit /etc/fstab to create a mount point of /opt/RealNetworks/SAFR/cv-storage for your shared storage. The specific mount options should be provided by your specific storage service/device.

  fs-12345678.efs.us-west-2.amazonaws.com:/ /opt/RealNetworks/SAFR/cv-storage nfs4 nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,_netdev 0 0

- Mount the NFS share.

  sudo mount -a

- Start SAFR.

  /opt/RealNetworks/SAFR/bin/start
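Before starting SAFR, it can be worth confirming that the fstab entry really targets the cv-storage path. The helper below is a minimal sketch of such a check; `find_fstab_entry` is a hypothetical name, and it only parses the whitespace-separated fstab fields (device, mount point, type, options) without validating the mount options themselves.

```python
def find_fstab_entry(fstab_text, mount_point):
    """Return (device, fs_type, options) for mount_point, or None if there is no entry."""
    wanted = mount_point.rstrip("/")
    for line in fstab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) >= 4 and fields[1].rstrip("/") == wanted:
            return fields[0], fields[2], fields[3].split(",")
    return None


if __name__ == "__main__":
    with open("/etc/fstab") as fstab:
        print(find_fstab_entry(fstab.read(), "/opt/RealNetworks/SAFR/cv-storage"))
```

After `sudo mount -a`, `os.path.ismount("/opt/RealNetworks/SAFR/cv-storage")` from the Python standard library gives a quick confirmation that the share is mounted and not just listed.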

Windows

Windows cannot mount a shared storage location directly to the C:\ProgramData\RealNetworks\SAFR\cv-storage location. Instead, you must create a symbolic link from that location to the shared storage location (either a mapped network drive or an SMB share).

- Stop SAFR.

"C:\Program Files\RealNetworks\SAFR\bin\stop.bat"

- Create NFS or some other shared storage location.

- Delete the existing C:\ProgramData\RealNetworks\SAFR\cv-storage by running rmdir /q /s C:\ProgramData\RealNetworks\SAFR\cv-storage in an administrative command prompt. Deleting the existing cv-storage allows you to create a symbolic link from the ‘cv-storage’ location to your shared storage location. Note: Be sure you are either doing this on a new system without any data, or that you’ve followed the migration steps above to consolidate your objects onto the new shared storage location.

- Create the symbolic link from the cv-storage location to your shared storage location. To do this, run one of the following commands in an administrative command prompt:

  - If you’re using a mapped network drive, run

    mklink /d C:\ProgramData\RealNetworks\SAFR\cv-storage Z:\

  - If you’re using an SMB share, run

    mklink /d C:\ProgramData\RealNetworks\SAFR\cv-storage \\servername\share

- Start SAFR.

"C:\Program Files\RealNetworks\SAFR\bin\start.bat"